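Background

Prometheus monitoring is deployed in the Kubernetes cluster with the kube-prometheus project, while the middleware runs standalone on servers outside the cluster. The middleware itself was already monitored, but this batch of servers is unreliable: five machines once rebooted at the same time. So the machines hosting mysql/redis/es need to be monitored as well.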

Installation

Download the package from https://github.com/prometheus/node_exporter/releases. I chose version 1.8.2.

    cd /opt
    wget https://github.com/prometheus/node_exporter/releases/download/v1.8.2/node_exporter-1.8.2.linux-amd64.tar.gz
    tar xf node_exporter-1.8.2.linux-amd64.tar.gz
    mv node_exporter-1.8.2.linux-amd64 node_exporter
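Before unpacking, it can be worth checking the tarball against the checksum list shipped with the release. The sketch below assumes the release page offers a `sha256sums.txt` file next to the tarball and that `sha256sum` is installed; `verify_release` is a hypothetical helper name, not part of node_exporter.

```shell
# Verify a downloaded tarball against a checksum list.
# Usage: verify_release <tarball> <sha256sums.txt>
# Returns non-zero if the hash differs or the tarball is not listed.
verify_release() {
    tarball=$1
    sums=$2
    name=$(basename "$tarball")
    # Keep only the checksum line for our tarball, then let sha256sum
    # compare it against the file on disk.
    awk -v f="$name" '$2 == f' "$sums" |
        (cd "$(dirname "$tarball")" && sha256sum -c -)
}
```

Usage, assuming both files were downloaded into `/opt`: `verify_release /opt/node_exporter-1.8.2.linux-amd64.tar.gz /opt/sha256sums.txt && echo verified`.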

Create a dedicated user to run node_exporter, which is safer than running it as root.

    groupadd prometheus
    useradd -g prometheus -s /sbin/nologin prometheus
    chown -R prometheus:prometheus /opt/node_exporter/

Create a systemd unit file

    cat /usr/lib/systemd/system/node_exporter.service
    [Unit]
    Description=node_exporter
    Documentation=https://prometheus.io/
    After=network.target

    [Service]
    Type=simple
    User=prometheus
    Group=prometheus
    ExecStart=/opt/node_exporter/node_exporter
    ExecReload=/bin/kill -HUP $MAINPID
    KillMode=process
    Restart=on-failure

    [Install]
    WantedBy=multi-user.target

Start the service

    systemctl daemon-reload
    systemctl enable --now node_exporter

If the second command prints "Created symlink from /etc/systemd/system/multi-user.target.wants/node_exporter.service to /usr/lib/systemd/system/node_exporter.service.", the service has been enabled to start at boot.

Request http://127.0.0.1:9100/metrics to verify that it works

    [root@es-3 ~]# curl http://127.0.0.1:9100/metrics |head -100
      % Total    % Received % Xferd  Average Speed   Time    Time     Time  Current
                                     Dload  Upload   Total   Spent    Left  Speed
      0     0    0     0    0     0      0      0 --:--:-- --:--:-- --:--:--     0
    # HELP go_gc_duration_seconds A summary of the pause duration of garbage collection cycles.
    # TYPE go_gc_duration_seconds summary
    go_gc_duration_seconds{quantile="0"} 5.5111e-05
    go_gc_duration_seconds{quantile="0.25"} 6.2745e-05
    go_gc_duration_seconds{quantile="0.5"} 6.8598e-05
    go_gc_duration_seconds{quantile="0.75"} 9.7717e-05
    go_gc_duration_seconds{quantile="1"} 0.000294668
    go_gc_duration_seconds_sum 0.05627822
    go_gc_duration_seconds_count 659
    # HELP go_goroutines Number of goroutines that currently exist.
    # TYPE go_goroutines gauge
    go_goroutines 9
    # HELP go_info Information about the Go environment.
    # TYPE go_info gauge
    go_info{version="go1.22.5"
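The first 100 lines are mostly Go runtime metrics, so they do not show whether the host-level collectors are working. A small sketch for that check: count the sample lines whose metric name starts with `node_`. The helper name `count_node_metrics` is made up for this example.

```shell
# Read Prometheus text-format metrics on stdin and print how many
# sample lines belong to node_* metrics (comment lines start with '#'
# and are therefore not counted).
count_node_metrics() {
    # grep -c prints 0 but exits non-zero when nothing matches,
    # so "|| true" keeps the function's exit status clean.
    grep -c '^node_' || true
}
```

Usage: `curl -s http://127.0.0.1:9100/metrics | count_node_metrics`. The `-s` flag keeps curl's progress meter out of the output. A result of 0 would suggest the exporter is up but its collectors are not producing data.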

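Scraping the data

The Prometheus instance running in Kubernetes now needs to scrape these node_exporter instances outside the cluster. There are two ways to do this: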

Option 1: use a ServiceMonitor

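Create an Endpoints object and a Service object. The Service has no selector, so Kubernetes will not manage its endpoints; the manually created Endpoints object with the same name supplies the external IPs.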

    ---
    apiVersion: v1
    kind: Endpoints
    metadata:
      name: node-exporter-other
      namespace: monitoring
    subsets:
      - addresses:
          - ip: 10.251.1.51
          - ip: 10.251.1.52
          - ip: 10.251.1.66
          - ip: 10.251.1.67
          - ip: 10.251.1.68
          - ip: 10.251.1.63
          - ip: 10.251.1.64
          - ip: 10.251.1.65
        ports:
          - name: metrics
            port: 9100
            protocol: TCP
    ---
    kind: Service
    apiVersion: v1
    metadata:
      name: node-exporter-other
      namespace: monitoring
      labels:
        app: node-exporter-other
        prometheus.io/monitor: "true"
    spec:
      type: ClusterIP
      ports:
        - name: metrics
          port: 9100
          protocol: TCP
    ---
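When the host list changes often, editing the address list by hand gets error-prone. As a rough sketch, the Endpoints manifest could be generated from a list of IPs instead. `gen_endpoints` is a hypothetical helper; the object name, namespace, and port are copied from the manifest above.

```shell
# Print an Endpoints manifest with one address entry per argument.
gen_endpoints() {
    cat <<'EOF'
apiVersion: v1
kind: Endpoints
metadata:
  name: node-exporter-other
  namespace: monitoring
subsets:
  - addresses:
EOF
    # One "- ip:" entry per IP passed on the command line.
    for ip in "$@"; do
        printf '      - ip: %s\n' "$ip"
    done
    cat <<'EOF'
    ports:
      - name: metrics
        port: 9100
        protocol: TCP
EOF
}
```

Usage: `gen_endpoints 10.251.1.51 10.251.1.52 | kubectl apply -f -`. Keeping the IPs in a plain text file and feeding them in with `gen_endpoints $(cat hosts.txt)` is one possible way to keep the list under version control.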

Create the ServiceMonitor object

    apiVersion: monitoring.coreos.com/v1
    kind: ServiceMonitor
    metadata:
      name: node-exporter-other
      namespace: monitoring
      labels:
        prometheus.io/monitor: "true"
    spec:
      endpoints:
        - interval: 15s
          port: metrics
          path: /metrics
      jobLabel: node-exporter-other
      selector:
        matchLabels:
          app: node-exporter-other
      namespaceSelector:
        matchNames:
          - monitoring

Run kubectl apply -f to load these objects into the cluster. After a short wait, the new targets show up in Prometheus.

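Note: do not set interval too long here, for example 60s. Otherwise some queries return no data, such as increase(node_network_receive_bytes_total{instance="$instance",device="$device"}[1m]). With a 60s scrape interval, a [1m] range usually contains only one sample, and increase() and rate() need at least two samples in the range to compute a result. If you must use 60s, widen the range window in the query accordingly.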
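The arithmetic behind this can be sketched in a few lines. Assuming perfectly regular scrapes, a range window of W seconds is only guaranteed to contain floor(W / interval) samples, and `rate()`/`increase()` need at least two. The function names below are made up for illustration.

```shell
# Minimum number of samples guaranteed inside a range window.
# Usage: min_samples <scrape_interval_seconds> <window_seconds>
min_samples() {
    interval=$1
    window=$2
    echo $(( window / interval ))
}

# Succeeds only if the window is guaranteed to hold the two samples
# that rate()/increase() require.
window_ok() {
    [ "$(min_samples "$1" "$2")" -ge 2 ]
}
```

For example, `min_samples 15 60` prints 4, while `min_samples 60 60` prints 1, which is why the [1m] query comes back empty at a 60s interval. Real scrapes have some jitter and occasional failures, so it seems safer to leave more margin than this bare minimum.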

Option 2: modify prometheus-prometheus.yaml

Edit the Prometheus manifest prometheus-prometheus.yaml and add the following field:

additionalScrapeConfigs:

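This field tells the operator to take the value of the key prometheus-additional.yaml in the Secret named additional-configs and load it into the Prometheus configuration as extra scrape configs.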

cat prometheus-prometheus.yaml

    apiVersion: monitoring.coreos.com/v1
    kind: Prometheus
    metadata:
      labels:
        prometheus: k8s
      name: k8s
      namespace: monitoring
    .......
      securityContext:
        fsGroup: 2000
        runAsNonRoot: true
        runAsUser: 1000
      # add this
      additionalScrapeConfigs:
        name: additional-configs
        key: prometheus-additional.yaml
      serviceAccountName: prometheus-k8s
      serviceMonitorNamespaceSelector: {}
    ....

The additional-configs Secret is usually created from a file. For example, create a file named prometheus-additional.yaml and then run:

    # Delete and recreate the additional-configs Secret to update it; Prometheus reloads automatically
    kubectl delete secrets -n monitoring additional-configs
    kubectl create secret generic additional-configs --from-file=prometheus-additional.yaml -n monitoring
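Deleting and recreating leaves a short window in which the Secret does not exist, and the delete fails on the very first run when there is nothing to delete yet. A common alternative, sketched here, is to render the Secret with a client-side dry run and pipe it into `kubectl apply`, which creates or updates in one step. The wrapper name `update_additional_configs` is hypothetical; the Secret name, file, and namespace are the ones used above.

```shell
# Create or update the additional-configs Secret in place.
update_additional_configs() {
    # --dry-run=client renders the Secret manifest locally without
    # sending it to the cluster; kubectl apply then creates or updates it.
    kubectl create secret generic additional-configs \
        --from-file=prometheus-additional.yaml \
        -n monitoring --dry-run=client -o yaml |
        kubectl apply -f -
}
```

Run `update_additional_configs` from the directory that contains prometheus-additional.yaml.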

The contents of prometheus-additional.yaml are shown below; adjust them to your own needs.

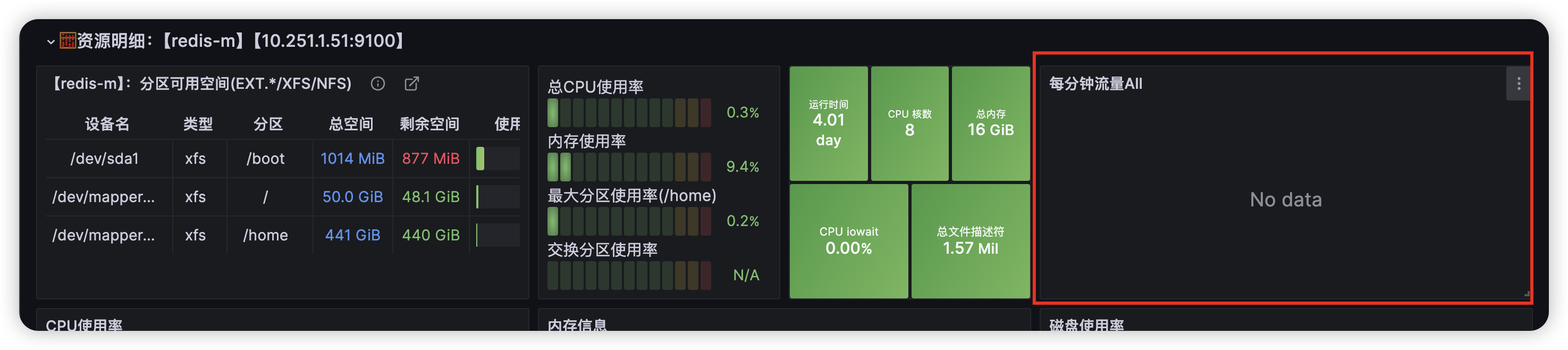

    - job_name: "node_exporter-other"
      scrape_interval: 10s
      static_configs:
        - targets: ['10.251.1.61:9100']
          labels:
            instance: redis-m
        - targets: ['10.251.1.63:9100']
          labels:
            instance: redis-s
        - targets: ['10.251.1.64:9100']
          labels:
            instance: mysql-m
        - targets: ['10.251.1.65:9100']
          labels:
            instance: mysql-s

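Summary

Both approaches can monitor resources outside the cluster, and they work not only for node_exporter but for other out-of-cluster targets as well. Each has its trade-offs. I generally prefer the first one, and would probably reach for the second in more complex scenarios.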